Social Media’s Intolerance Death Spiral

We’ve all been on social media for far too long and it’s changed some of us. We started as starry-eyed enthusiasts: “surely the human race will be able to recognize it when I explain the One True Right Way of Doing Things” (whatever that might be) “so I’ll be nice to everyone as I’m helping them to reach the Light”. But now, when we read about hollow Earths or the Moon not existing for the 42nd time, we think “ugh, not this moron again”. And that’s the best-case scenario: we’ve seen examples of widespread harassment from people who, in principle, profess philosophies of love and acceptance. It’s a curious effect, so it’s worthwhile to take a step back and ask ourselves: why does it happen?

This is what Camilla Westermann and I asked ourselves during her thesis project, which turned into the paper “A potential mechanism for low tolerance feedback loops in social media flagging systems,” published a couple of months ago in PLOS ONE. We hypothesized there is a systemic issue: social media is structured in a way that leads people to quickly run out of tolerance. This is not a new idea: many people have already pointed out that an indifferent algorithm sees “enragement” and thinks “engagement”, and thus it will actively recommend you the things most likely to make you mad, because anger will keep you on the platform.

While likely true, this is an incomplete explanation. Profiting off radicalization doesn’t sound… nice? Thus it might be bad for business in the long run, if people with pitchforks start knocking at the shiny glass door of your social media behemoth. So, virtually all mainstream platforms have put systems into place to limit the spread of inflammatory content: moderation, flagging, and the like. So why isn’t it working? Why is online discourse apparently becoming worse and worse?

Our proposed answer is that these moderation systems — even if implemented in good faith — are the symptom of a haphazard understanding of the problem. To make our case we created a simple Agent-Based Model. In it, people read content shared by their friends and flag it when it is too far away from their worldview. This is regulated by a tolerance parameter: the higher your tolerance, the more ideological distance a news item requires to trigger your flagging finger.
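
To make the flagging rule concrete, here is a minimal sketch. It assumes opinions live on a one-dimensional scale from -1 to 1 and that ideological distance is a plain absolute difference. The function names and the numbers are made up for illustration and are not taken from the paper's code.

```python
def flags(reader_opinion, item_opinion, tolerance):
    """True when the item is farther from the reader's worldview than
    the reader's tolerance allows (assumed distance: absolute difference)."""
    return abs(reader_opinion - item_opinion) > tolerance


def count_flags(item_opinion, reader_opinions, tolerance):
    """Number of readers who would flag an item, all sharing one tolerance."""
    return sum(flags(r, item_opinion, tolerance) for r in reader_opinions)


# Hypothetical audience: two readers on each side of the spectrum.
readers = [-0.8, -0.1, 0.2, 0.9]

# A perfectly neutral item (opinion 0.0) under two tolerance levels.
low_tolerance_flags = count_flags(0.0, readers, tolerance=0.5)
high_tolerance_flags = count_flags(0.0, readers, tolerance=1.0)
```

With the low tolerance, the two readers at the extremes flag the neutral item. With the high tolerance, nobody does.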

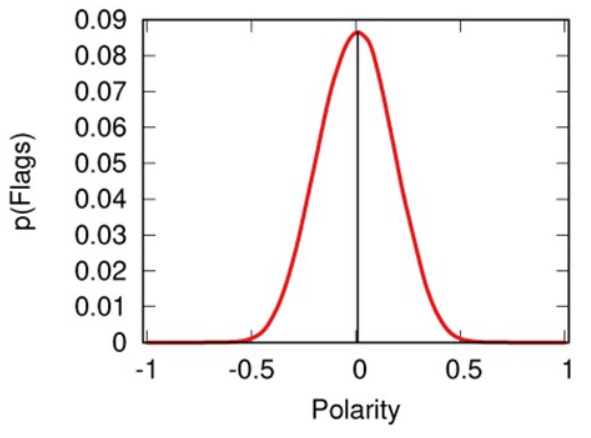

This is a model I already talked about in the past and its results were pretty bleak. From the picture above you can see that neutral news sources get flagged the most. This is due to the characteristics of real-world social media — echo chambers, confirmation bias, and the like. In the end, we punish content producers for being moderate.

The thing I didn’t say that time was that the model only shows that pattern for low values of the tolerance parameter. For high tolerance, things are pretty ok. So, if everyone started as a starry-eyed optimist, how did we end up with *gestures in the general direction of Twitter*?

Our explanation is made of a simple ingredient: people think they’re right and want to convince others to behave accordingly because it’s Good (“go to church more!”; “use the correct pronouns!”), so they do whatever they think will achieve that objective.

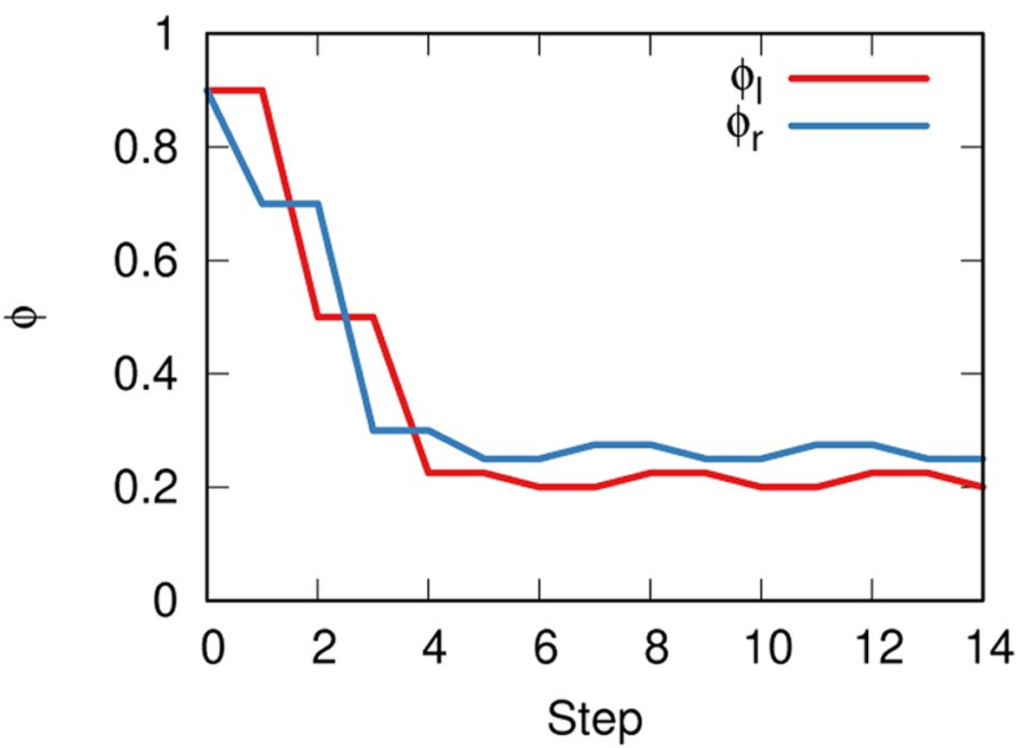

We started the model with the two sides having the same tolerance, set at very high levels, because we are incurable optimists. At each time step, one of the two sides will change their tolerance level. They will search for the tolerance level that will push news sources the most to their side — which, mind you, can also be a higher tolerance level, not necessarily a lower one.
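
One update step of this kind can be sketched as follows. This is a toy under heavy assumptions, not the model from the paper: opinions are numbers between -1 and 1, every reader sees every source (no friendship network), each source may shift by one small step to whichever nearby opinion gets the fewest flags, and a side scores a tolerance level by how far the average source ends up on its side. All names, the step size, and the candidate grid are hypothetical.

```python
def flags_received(source, readers, tolerances):
    """Count readers whose distance to the source exceeds their own tolerance."""
    return sum(abs(r - source) > t for r, t in zip(readers, tolerances))


def move_sources(sources, readers, tolerances, step=0.1):
    """Each source picks, among staying put or shifting one step either way,
    the opinion with the fewest flags. Staying put is listed first, so it wins ties."""
    moved = []
    for s in sources:
        candidates = [s, s - step, s + step]
        moved.append(min(candidates, key=lambda p: flags_received(p, readers, tolerances)))
    return moved


def best_tolerance(side, sides, readers, tolerances, sources, grid):
    """Tolerance from `grid` that pulls sources the most toward `side` (+1 or -1).
    sides[i] is the side of reader i; earlier grid entries win ties."""
    def pull(candidate):
        # Everyone on `side` adopts the candidate; the other side is unchanged.
        trial = [candidate if s == side else t for s, t in zip(sides, tolerances)]
        moved = move_sources(sources, readers, trial)
        return side * sum(moved) / len(moved)
    return max(grid, key=pull)


# Toy setup: one reader per side, one neutral source, both sides very tolerant.
readers = [-0.5, 0.5]
sides = [-1, +1]
tolerances = [1.0, 1.0]
sources = [0.0]
grid = [1.0, 0.45]  # keep the current tolerance, or drop to a lower one

choice = best_tolerance(+1, sides, readers, tolerances, sources, grid)
```

In this toy the right-hand side picks 0.45: at that tolerance its reader flags the neutral source, which dodges the flag by shifting to 0.1. Because the grid can hold values above the current tolerance too, the same search can just as well pick a higher level when that pulls more.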

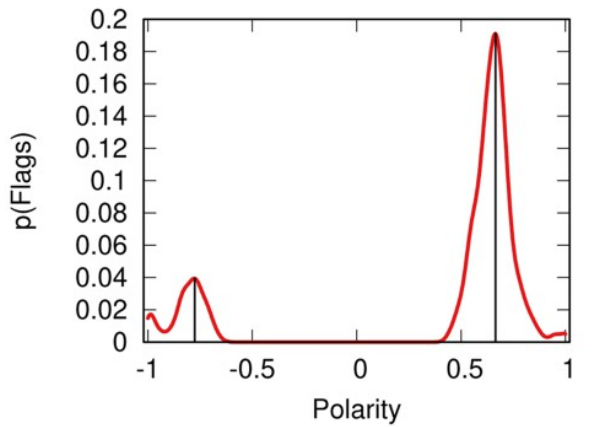

The image above shows that, in the beginning, lowering tolerance is a winning strategy. The news sources on the more tolerant side get flagged more by the people from the other, less tolerant, side. Since they don’t like being flagged, they are incentivized to find whatever opinion will minimize the number of flags received (see this other previous work). This happens to pull them to the intolerant side. The problem is that, in our model, no one wants to be a sucker. “If they are attracting people to their side by being intolerant, why can’t I?” is the subconscious mantra we see happening. An intolerance death spiral kicks in, where both sides progressively push the other to even lower tolerance levels, because… it just works.

This happens until the system stabilizes at a relatively low (but non-zero) level of tolerance. Below a certain level, intolerance is so high it doesn’t attract anymore. Too low a tolerance only repels, because people would flag you anyway, so what would be the point of moving closer to the intolerant side?

Of course, this is only the result of a simulation, so it should be taken with the usual boatload of grains of salt. The real world is a much more complex place, with many different dynamics, and humans aren’t blind optimizers of functions[citation needed]. However, it is a simulation using more realistic starting conditions than what social media flagging systems assume, and the low tolerance value the model settles on happens to be extremely close to our best-guess estimate of what is consistent with observed data. So ours might be a guess, but at least it’s decently educated.

What can we take from this research? If you own a social media platform, the advice would be not to implement poorly-thought-out flagging moderation systems: create models with more realistic assumptions (like ours) and use them to guide your solutions. Otherwise, you might be making the problem worse.

And if you’re a regular user? Well, maybe sometimes, being nice is better than making your side win. I’m looking forward to reading on Twitter what some people think about this philosophy. I’m sure it will go great.