Memetics, or: How I can spend my entire day on Reddit claiming that I’m working

In his 1976 book “The Selfish Gene”, Richard Dawkins proposed a shift in the way we look at evolution: instead of considering organisms as the center of evolution, Dawkins proposed (providing tons of evidence) to consider single genes as the fundamental unit of evolution. I am not a biologist, nor am I interested in genetics, so this idea should not concern me. However, Dawkins added one chapter to his book. He felt that culture, too, could be made out of self-replicating units, just like genes, that compete and/or collaborate with each other in forming “cultural organisms”. He decided to call these units “memes”.

The idea of memes mostly stayed in the realm of intellectuals and serious researchers (not like me); you can check out some pretty serious books like “Metamagical Themas” by Hofstadter or “Thought Contagion: How Belief Spreads Through Society” by Lynch. But then something terrible was brought to the world: the World Wide Web happened, bringing with it a nexus of inside jokes, large communities, mind hives, social media, 2.0s, God knows what. Oh and cats. Have one, please:

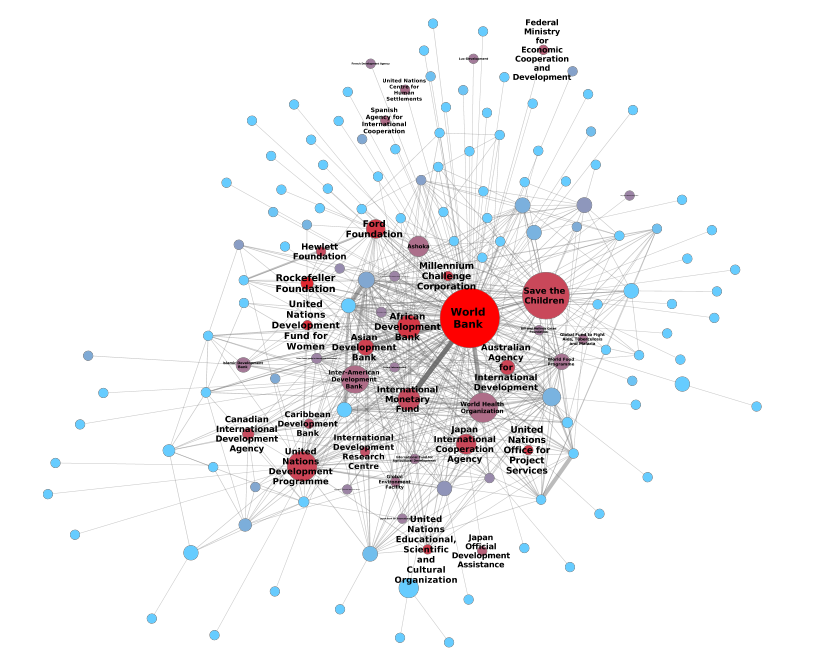

With the WWW, studying memes became easier, because on the Internet every piece of information has to be stored somehow, somewhere. This is not something I discovered by myself: there are plenty of smart guys out there doing marvelous research. I’ll give just three examples out of possibly tens or hundreds:

- Studies about memes competing for the attention of people in a social network, like “Clash of the contagions: Cooperation and competition in information diffusion” or “Competition among memes in a world with limited attention”;

- Studies about the adoption of conventions and behaviors by people, like “The emergence of conventions in online social networks” or “Cooperative behavior cascades in human social networks”;

- Studies about how information diffuses in networks, like “Virality and susceptibility in information diffusions” or “Mining the temporal dimension of the information propagation” which, absolutely incidentally, is a paper of mine.

There is one thing that I find to be mostly missing in the current state of the research on memes. Many, if not all, of the above-mentioned works focus on understanding how memes spread from one person to another, and they ask what the dynamics are, given that human minds are connected through a social network. In other words, what we have been studying is mostly the network of connections, regardless of what kinds of messages are passing through it. Now, most of the time these “messages” are about penguins that don’t know how to talk to girls, and in that case I grant you that you can fairly ignore them.

But my reasoning is that if we want to really understand memes and memetics, we can’t put all of our effort into just analyzing the networks they live in. It is like trying to understand genes and animals by analyzing only the environment they inhabit. If you want to know how to behave in front of a “tiger” without ever having met one, it is possibly useful to understand something about the forest it is dwelling in, but I strongly advise you to also take a look at its claws, teeth and how fast it can run or climb.

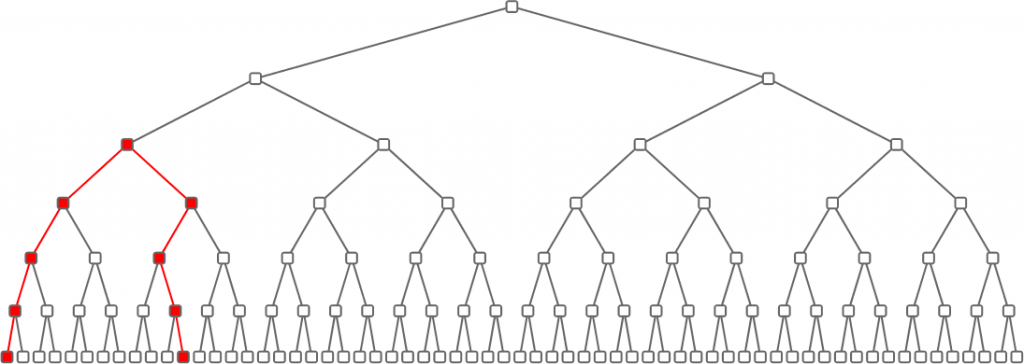

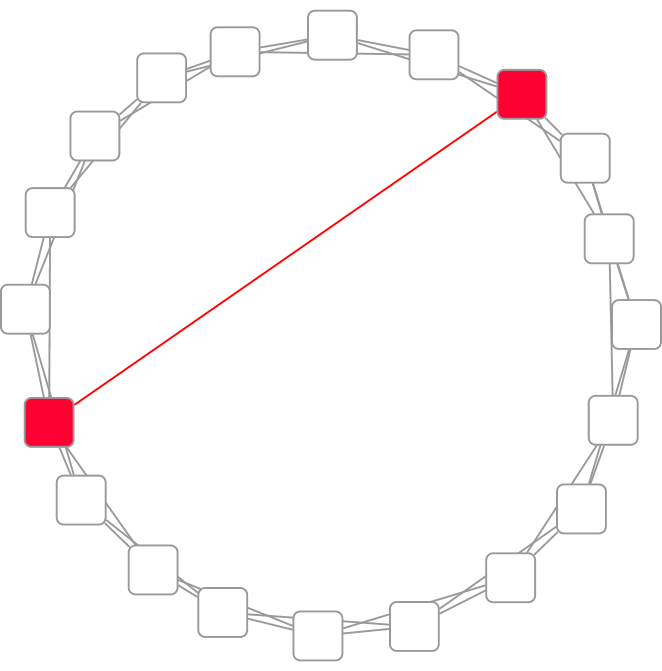

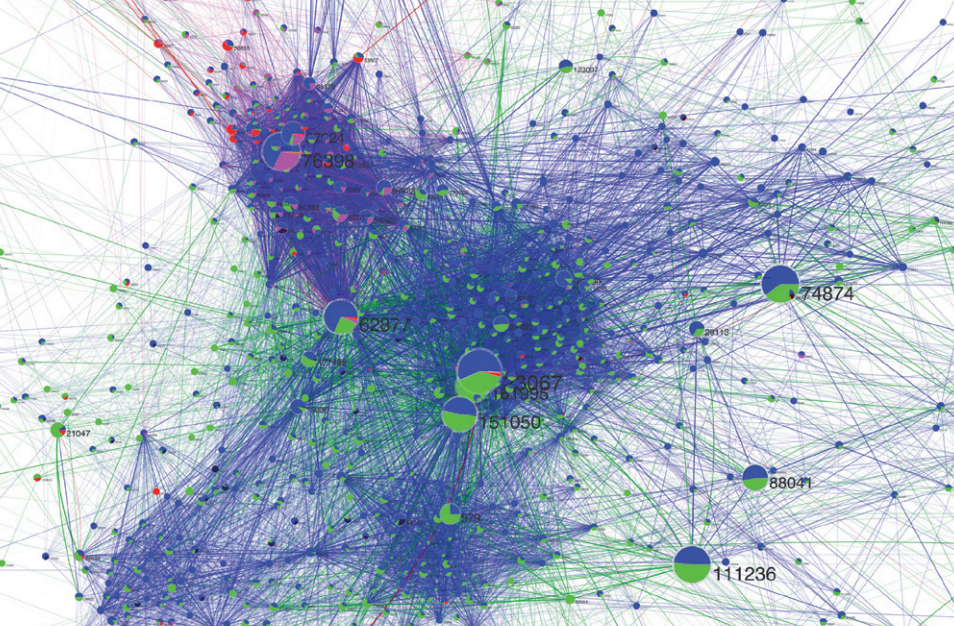

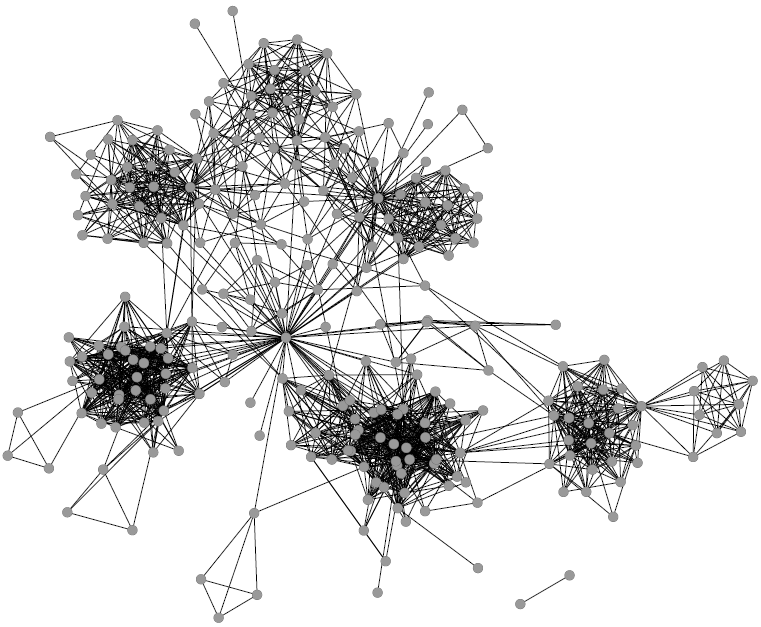

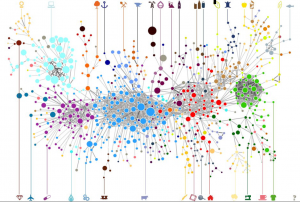

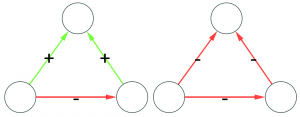

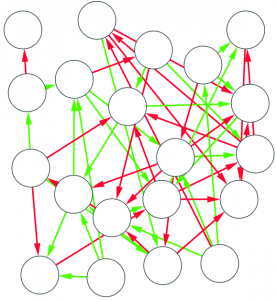

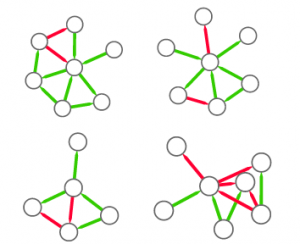

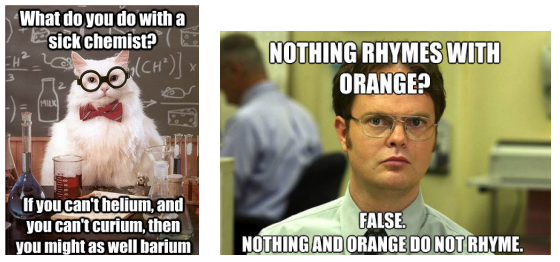

That is exactly what I study in a paper that I got accepted at the ICWSM conference, titled “Competition and Success in the Meme Pool: a Case Study on Quickmeme.com” (click to download). What I did was fairly simple: I downloaded a bunch of memes from Quickmeme.com and I studied the patterns of their appearances and upvotes across a year’s worth of data. Using some boring data analysis techniques borrowed from ecology, I was able to understand which memes compete (or collaborate) with which other ones, which characteristics make memes more likely to survive, and whether there are hints as to the existence of “meme organisms” (there are). One of my favorites is the small nerd-humor cluster:

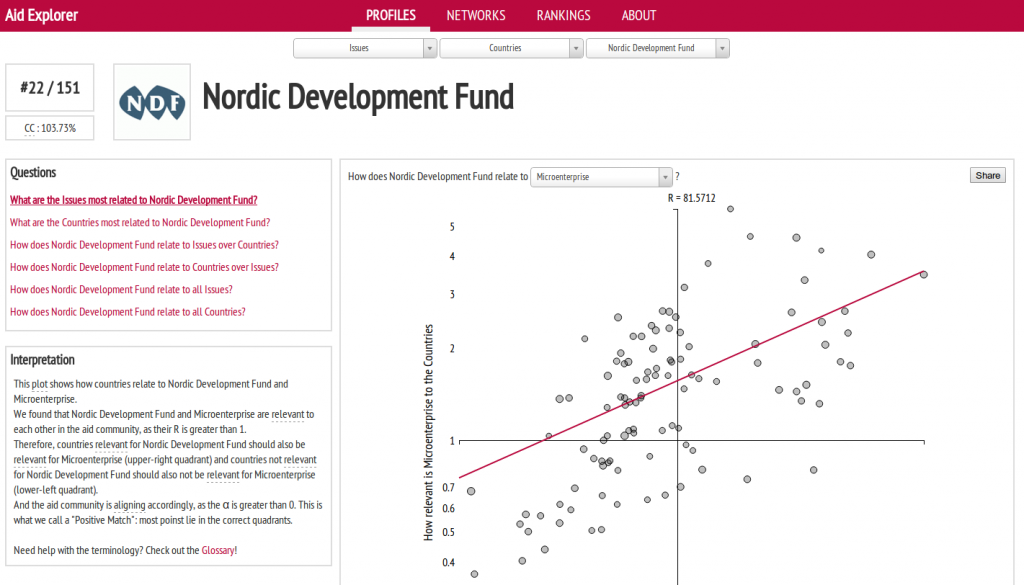

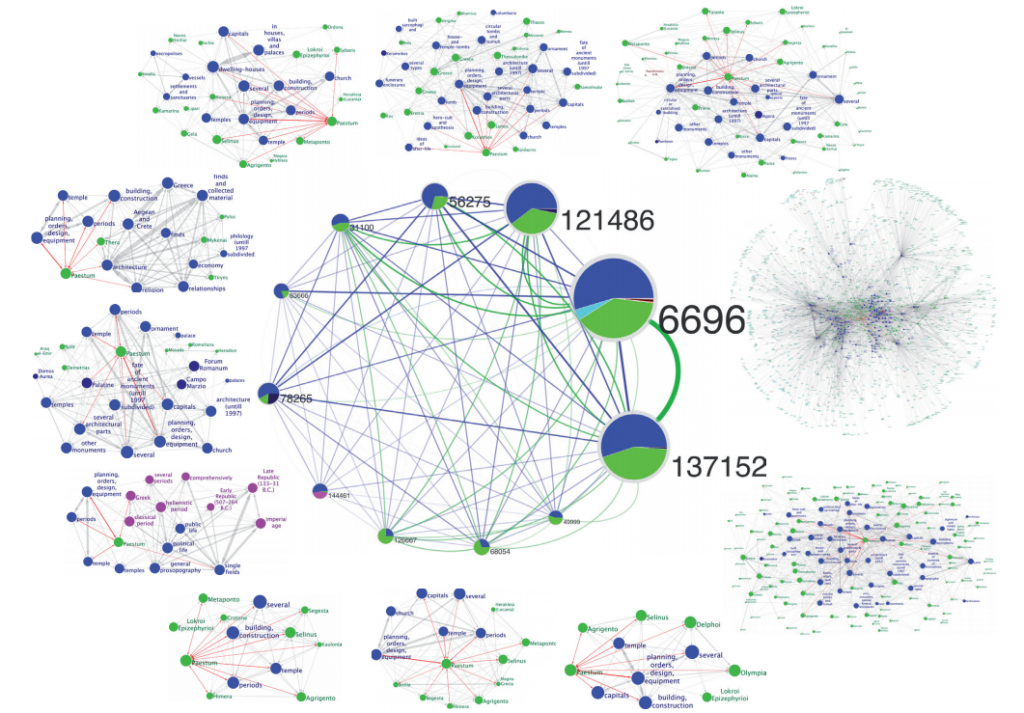

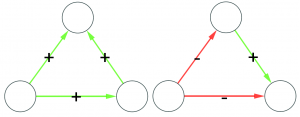

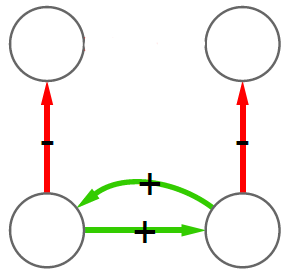

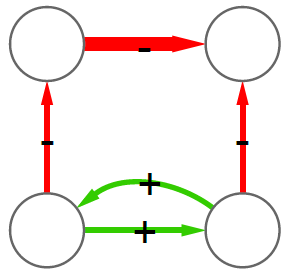
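To make the compete-or-collaborate idea a bit more concrete, here is a toy sketch in Python. It is not the method from the paper: as a stand-in, it simply assumes that two memes "collaborate" when their weekly upvote counts rise and fall together (strong positive Pearson correlation) and "compete" when one rises as the other falls (strong negative correlation). The meme names, the upvote numbers and the 0.8 threshold are all made up for illustration.

```python
from itertools import combinations
from math import sqrt

# Hypothetical weekly upvote counts per meme (made-up numbers, not real data).
weekly_upvotes = {
    "meme_a": [10, 20, 30, 40, 50],
    "meme_b": [12, 18, 33, 41, 48],
    "meme_c": [50, 40, 30, 20, 10],
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def label_pairs(series, threshold=0.8):
    """Label every pair of memes by the sign and strength of their correlation.

    The threshold is an arbitrary assumption chosen for this toy example.
    """
    labels = {}
    for a, b in combinations(sorted(series), 2):
        r = pearson(series[a], series[b])
        if r >= threshold:
            labels[(a, b)] = "collaborate"
        elif r <= -threshold:
            labels[(a, b)] = "compete"
        else:
            labels[(a, b)] = "neutral"
    return labels


pair_labels = label_pairs(weekly_upvotes)
for pair, label in pair_labels.items():
    print(pair, label)
```

With these invented numbers, `meme_a` and `meme_b` move together while `meme_c` moves against both of them. A group of memes that all collaborate with each other would be a candidate "organism" in this simplified picture.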

One of the nicest products of my paper was a simple visualization that helps us understand the effect of some of the characteristics associated with successful memes. As characteristics I took the number of memes in competition and in collaboration with the meme, whether the meme is part of a coherent group of memes (an “organism”), and whether the meme had a very large popularity peak. The result, in the picture below (click to enlarge), tells us an interesting story. In the picture, the odds of success are connected by arrows that represent the filters I used to group the memes, based on their characteristics.

This picture is saying: in general, memes have a 35.47% probability of being successful (given the definition of “successful” I gave in the paper). If a meme has a popularity peak that is larger than the average, then its probability of success decreases. This means that, my dear meme*, if you want to survive you have to keep a low profile. And, if you really can’t keep a low profile, then don’t make too many enemies (or your odds will go down to 6.25%). On the other hand, if you kept a low profile, then make as many enemies as you can, but only if you can count on many friends too, especially if you can be in a tightly connected meme organism (80.3%!). This is an exciting result that seems to suggest that memes are indeed collaborating together in complex cultural organisms because that’s how they can survive.
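The logic behind this kind of picture can be sketched in a few lines of Python: start from all memes, apply one filter at a time, and recompute the share of successful memes in whatever group is left. The records, the field names (`high_peak`, `many_competitors`, `in_organism`, `successful`) and the resulting numbers below are invented for illustration; they are not the data or the percentages from the paper.

```python
# Hypothetical meme records as tuples:
# (high_peak, many_competitors, in_organism, successful)
FIELDS = ("high_peak", "many_competitors", "in_organism", "successful")
rows = [
    (True, True, False, False),
    (True, True, False, False),
    (True, False, False, True),
    (True, False, False, False),
    (False, True, True, True),
    (False, True, True, True),
    (False, False, False, False),
    (False, False, False, True),
]
memes = [dict(zip(FIELDS, row)) for row in rows]


def success_rate(records, **filters):
    """Share of successful memes among those matching every filter.

    Returns None when no meme matches, to avoid dividing by zero.
    """
    selected = [m for m in records if all(m[k] == v for k, v in filters.items())]
    if not selected:
        return None
    return sum(m["successful"] for m in selected) / len(selected)


overall = success_rate(memes)
loud = success_rate(memes, high_peak=True)
loud_with_enemies = success_rate(memes, high_peak=True, many_competitors=True)
quiet_in_organism = success_rate(memes, high_peak=False, in_organism=True)

print(f"all memes:               {overall:.2%}")
print(f"high peak:               {loud:.2%}")
print(f"high peak, many enemies: {loud_with_enemies:.2%}")
print(f"low peak, in organism:   {quiet_in_organism:.2%}")
```

Each call to `success_rate` corresponds to following one chain of arrows in the picture: every extra keyword argument is one more filter narrowing down the group.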

What I did just scratches the surface of meme-centered studies, as opposed to network-centered meme studies. I am planning to study more deeply the causal relationship between a meme and its fitness to survive in the World Wild Web and to understand the mechanics of how memes evolve and mutate. Oh, and if you feel like it, I am also releasing the data that I collected for my study. It is in the “Quickmeme” entry under the Datasets tab (link for the lazies).

* I deeply apologize to Dawkins, to any readers (luckily they are few) and to the scientific community as a whole, for my personification of memes. I know that memes do not have a mind, therefore they can’t “decide” to do anything, but it really makes it so much easier to write!

I am an associate prof at IT University of Copenhagen. I mainly work on algorithms for the analysis of complex networks, and on applying the extracted knowledge to a variety of problems.

My background is in Digital Humanities, i.e. the connection between the unstructured knowledge and the coldness of computer science.

I have a PhD in Computer Science, obtained in June 2012 at the University of Pisa. In the past, I visited Barabasi's CCNR at Northeastern University, and worked for 6 years at CID, Harvard University.
